[YouTube] – [Personal Website]

- The presenter of this tutorial is Nils Reimers, the author of `sentence_transformers` and a researcher at Hugging Face.

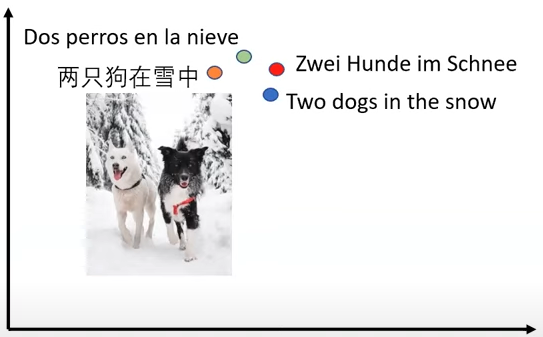

- Dense representations are interesting because they allow zero-shot classification in the embedding space. This works not only for text embeddings but also for multilingual and multimodal embeddings.

- Using out-of-the-box embeddings (for example, averaging BERT embeddings or using GPT-3 embeddings) does not work well (see [1], [2]).

- Vector Space

The contrastive or triplet loss may only optimize the local structure. A good embedding model should optimize both the global and the local structure.

- Global Structure: Relation of two random sentences.

- Local Structure: Relation of two similar sentences.
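One way to read the point about local structure is through a standard margin-based triplet loss, sketched below in plain Python. This is an illustration under assumptions, not the formulation used in the tutorial: the Euclidean distance, the margin of 1.0, and the toy 2-D vectors are all chosen here for demonstration.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Margin-based triplet loss: max(0, d(a, p) - d(a, n) + margin).

    The margin value is an assumption made for this sketch.
    """
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


# Toy 2-D "embeddings" (hypothetical values, for illustration only).
anchor = [0.0, 0.0]
positive = [0.0, 1.0]        # a similar sentence, distance 1.0 from the anchor
hard_negative = [0.0, 1.5]   # a dissimilar sentence that sits close to the anchor
far_negative = [10.0, 0.0]   # a dissimilar sentence that is already far away

loss_hard = triplet_loss(anchor, positive, hard_negative)  # 1.0 - 1.5 + 1.0 = 0.5
loss_far = triplet_loss(anchor, positive, far_negative)    # 1.0 - 10.0 + 1.0 < 0, clipped to 0.0

print(loss_hard, loss_far)
```

Once a negative is farther away than the positive plus the margin, the loss is zero and provides no training signal. Under this reading, the loss shapes the neighborhood of each anchor (local structure) but places no further constraint on how two random, unrelated sentences are arranged relative to each other (global structure).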

Reference

- OpenAI GPT-3 Text Embeddings – Really a new state-of-the-art in dense text embeddings? | by Nils Reimers | Medium: This benchmarking was done in late December 2021, shortly after the embedding endpoint was released.

- MTEB Leaderboard – a Hugging Face Space by mteb: As of 2023-10-12, `text-embedding-ada-002` ranks 14th in the benchmark. All 13 models that rank higher are open-source.